(Createquity’s summer rerun programming continues this week with a focus on arts research! This instant classic by Createquity Writing Fellow Katherine Gressel spread like wildfire when it was first published in January 2012, and remains our third-most popular post ever. It even brought us a bunch of new readers from Australia! [Long story.] While not a short read, it’s packed with useful information about how practitioners have gone about conceptualizing and evaluating one of the hardest beasts to measure – public art. -IDM)

Steve Powers, “Look Look Look,” Part of the “A Love Letter for You” project, commissioned by the Philadelphia Mural Arts Program, 2009-2010. http://www.aloveletterforyou.com

In the Spring/Summer 2011 issue of Public Art Review, Jack Becker writes, “There is a dearth of research efforts focusing on public art and its impact. The evidence is mostly anecdotal. Some attempts have focused specifically on economic impact, but this doesn’t tell the whole story, or even the most important stories.”

Becker’s statement gets at some of the main challenges in measuring the “impact” of a work of public art—a task which more often than not provokes grumbling from public art administrators. When asked how they know their work is successful, most organizations and artists that create art in the public realm are quick to cite things like people’s positive comments, or the fact that the artwork doesn’t get covered with graffiti or cause controversy.

We are much less likely to hear about systematic data gathered over a long time period—largely due to the seemingly complex, time-consuming, or futile nature of such a task. Unlike museums or performance spaces, public art traditionally doesn’t sell tickets, or attract “audiences” who can easily be counted, surveyed, or educated. A public artwork’s role in economic revitalization is difficult to separate from that of its overall surroundings. And as Becker suggests, economic indicators of success may leave out important factors like the intrinsic benefits of experiencing art in one’s everyday life.

However, public art administrators generally agree that some type of evaluation is key in not only making a case for support from funders, but in building a successful program. In the words of Chicago Public Art Group (CPAG) executive director Jon Pounds, evaluations can at the very least “help artists strengthen their skills…and address any problems that come up in programming.” Is there a reliable framework that can be the basis of all good public art evaluation? And what are some simple yet effective evaluation methods that most organizations can implement?

This article will explore some of the main challenges with public art evaluation, and then provide an overview of what has been done in this area so far with varying degrees of success. It builds upon my 2007 Columbia University Teachers College Arts Administration thesis, And Then What…? Measuring the Audience Impact of Community-Based Public Art. That study specifically dealt with the issue of measuring audience response to permanent community-based public art, and included interviews with a wide range of public artists and administrators.

This article will discuss evaluation more broadly—moving beyond audience response—and incorporate more recent interviews with leaders in the public art field. My goal was not to generate quantitative data on what people are doing in the field as a whole with evaluation (according to Liesel Fenner, director of Americans for the Arts’s Public Art Network, such data is not yet available, though it is a goal). Instead, I have reviewed recent literature on public art assessment, and interviewed a range of different types of organizations, from government-run “percent for art” and transit programs to grassroots community-based art organizations in New York City (where I am based) and other parts of the United States. I sought to find out whether evaluation is considered important, how much time is devoted to it, and the details of particularly innovative efforts.

The challenge of defining what we are actually evaluating

The term “public art” once referred to monumental sculptures celebrating religious or political leaders. It evolved during the mid-twentieth century to include art meant to speak for the “people” or advance social and political movements, as in the Mexican and WPA murals of the 1930s, or the early community murals of the 1960s-1970s civil rights movements. Today, “public art” can describe anything from ephemeral, participatory performances to illegal street art to internet-based projects. The intended results of various types of public art, and our capacity to measure them, are very different.

In the social science field, evaluation typically involves setting clear goals, or expected outcomes, connected to the main activities of a program or project. It also involves defining indicators that the outcomes have been met. This exercise often takes the form of a “theory of change.” Since there are so many types of public art, it is exceedingly difficult to develop one single “theory of change” for the whole field, but it may be helpful to use a recent definition of public art from the UK-based public art think tank Ixia: “A process of engaging artists’ ideas in the public realm.” This definition implies that public art will always occupy some kind of “public realm” (whether it is a physical place or otherwise-defined community) and require an “engagement” with the public that may or may not result in a tangible artwork as an end result. This process and the reactions of the public must be evaluated along with whatever artistic product may come out of it.

The challenge of building a common framework for evaluation

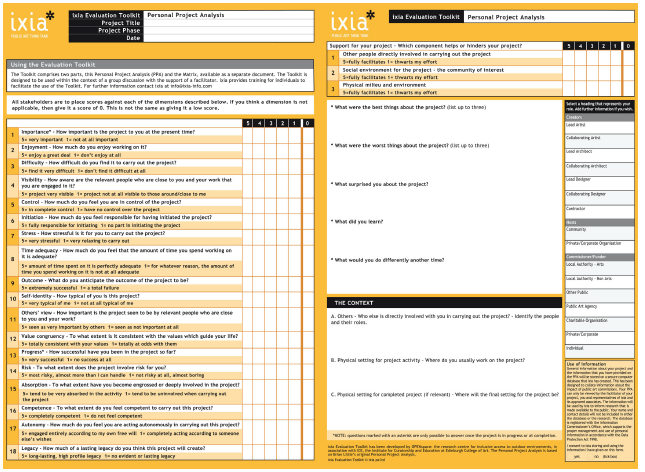

In 2004, Ixia commissioned OPENspace, the research center for inclusive access to outdoor environments based at the Edinburgh College of Art and Heriot-Watt University, to research ways of evaluating public art, ultimately resulting in a comprehensive 2010 report, “Public Art: A Guide to Evaluation” (see a helpful summary by Americans for the Arts). The guide’s emphasis and content were shaped by feedback from Ixia’s Evaluation Seminars and fieldwork conducted by Ixia and consultants who have used its Evaluation Toolkit. Ixia provides the most comprehensive resources on evaluation that I have encountered, with two main evaluation tools, the evaluation matrix and the personal project analysis. These are helpful as a starting point for evaluating any project or program.

The matrix’s goal is to “capture a range of values that may need to be taken into account when considering the desirable or possible outcomes of engaging artists in the public realm.” It is meant to be filled out by various stakeholders during a project-planning stage, as well as at the midpoint and conclusion of a project.

Ixia’s “personal project analysis” is “a tool for process delivery that aims to assess how a project’s delivery is being put into practice.” I will not analyze it in detail here, except to say that something similar should also ideally be part of any organization’s evaluation plan, as it allows for assessing how well the project is being carried out.

Ixia’s matrix identifies four main categories of values:

- Artistic Values [visual/aesthetic enjoyment, design quality, social activation, innovation/risk, host participation, challenge/critical debate]

- Social Values [community development, poverty and social inclusion, health and well being, crime and safety, interpersonal development, travel/access, and skills acquisition]

- Environmental Values [vegetation and wildlife, physical environment improvement, conservation, pollution and waste management (air, water and ground quality), and climate change and energy]

- Economic Values [marketing/place identity, regeneration, tourism, economic investment and output, resource use and recycling, education, employment, project management/sustainability, and value for money].

The matrix accounts for the fact that each public artwork’s values and desired outcomes will be different depending on the nature of the presenting organization, site, and audience.

It is unclear how widely these tools have been adopted in the UK since their publication, and I did not encounter anyone in the U.S. using them. Yet many organizations are employing a similar process of engaging various stakeholders during the project-planning phase to determine goals specific to each project, which relate to the categories in Ixia’s matrix. For example, most professionals I interviewed cited some type of “artistic” goals for the work. Some organizations prioritize presenting the highest quality art in public spaces, in which case the realization of an artist’s vision is top priority (representatives of New York City’s Percent for Art program described “skilled craftsmanship” and “clarity of artistic vision” as key success factors, for example).

By contrast, organizations that include a youth education or community justice component may rank “social” or “economic” values higher. Groundswell Community Mural Project, an NYC-based nonprofit that creates mural projects with youth, asks all organizations that host mural projects (which may include schools, government agencies, and community-based organizations) in pre-surveys to choose their top desired project outcomes from a range of choices, as well as identify project-specific issues. Groundswell does have a well-developed theory of change behind all its projects, relating to the organization’s core mission to “beautify neighborhoods, engage youth in societal and personal transformation, and give expression to ideas and perspectives that are underrepresented in the public dialog.” However, some project-specific outcomes may be more environmental (for example, partnerships with the Trust for Public Land to integrate murals into new school playgrounds), while some relate to “crime and safety,” as in an ongoing partnership with the NYC Department of Transportation to install murals and signs at dangerous traffic intersections that educate the public about traffic safety.

Groundswell Community Mural Project, signs from “Traffic Safety Program,” a partnership between Groundswell, the Department of Transportation’s Safety Education program, and several NYC public elementary schools. Lead artists Yana Dimitrova, Chris Soria, and Nicole Schulman worked with students to create these signs installed at locations identified as most in need of traffic signage.

Groundswell is just one example of many public art organizations that set goals at the outset of each individual project, based on each project’s particular site and community. While individual organizations may effectively evaluate their own projects this way, crafting a common theory of change for all public art may be an unrealistic expectation.

The challenge of reliable indicators and data collection

The Ixia report discusses the process by which indicators of public art’s ability to produce desired outcomes may be identified, with the following questions:

- Is it realistic to expect a public art project to influence the outcomes you are measuring?

- Is it likely that you can differentiate the impact of the public art project and processes from other influences, e.g., other local investment?

- Is it possible to collect meaningful data on what matters in relation to the chosen indicators?

For example, in studies seeking to measure any kind of change, good data collection should always include a baseline—i.e., economic conditions or attitudes of people BEFORE the public art entered the picture. Data collection methods ideally should also be reliable, unbiased, and easily replicated.
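
The baseline idea can be made concrete with a small calculation. The following is a minimal sketch, not a method taken from the Ixia guide: it assumes a hypothetical survey in which residents rate a statement on a 1-to-5 scale before and after an artwork is installed, and it also assumes a nearby comparison site without public art, as one rough way to separate the artwork's effect from other local influences. All scores and names are invented for illustration.

```python
from statistics import mean


def mean_change(baseline, follow_up):
    """Change in the average score between two survey rounds."""
    return mean(follow_up) - mean(baseline)


def net_change(site_before, site_after, comparison_before, comparison_after):
    """Change at the artwork site minus change at a comparison site."""
    return mean_change(site_before, site_after) - mean_change(
        comparison_before, comparison_after
    )


# Hypothetical 1-5 agreement scores (invented data, not from any study).
mural_before = [2, 3, 3, 4, 2]
mural_after = [3, 4, 3, 5, 4]
nearby_before = [3, 3, 2, 4, 3]
nearby_after = [3, 4, 2, 4, 3]

site_shift = mean_change(mural_before, mural_after)
net_shift = net_change(mural_before, mural_after, nearby_before, nearby_after)
print(f"Change at mural site: {site_shift:+.2f}")
print(f"Change net of comparison site: {net_shift:+.2f}")
```

Without the "before" scores, neither number could be computed at all, which is why the baseline matters. A sample this small would of course not support any real conclusion.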

The “Guide to Evaluation” does not go into detail about any concrete indicators of public art’s “impact.” Therefore, the matrix seems to be most useful as a guide to goal-setting. As the Americans for the Arts summary of this report points out, “Ixia directs users to [UK-based] government performance indicators as a baseline source, but that is where the discussion ends.”

Liesel Fenner of Americans for the Arts’s Public Art Network mentioned in an email to me that while PAN hopes to develop a comprehensive list of indicators in the future, which can be shared among public art presenters nationally, “developing quantitative indicators is the main obstacle.”

According to my interviews with both on-the-ground administrators and public art researchers, many busy arts administrators find the type of data collection recommended in Ixia’s guide difficult, costly and time-consuming. It can be a challenge to get artistic staff to buy into even basic evaluation; says one community arts administrator, “artists are paid for their leadership in developing and delivering a strong project. Many artists don’t see as much value in evaluation because, in part, it comes in addition to the difficult work that they just accomplished.” It is also uncommon to spend precious training resources on something like quantitative evaluation techniques.

Some are of the opinion that even if significant time were spent on justifying public art’s existence by “proving” its practical usefulness, this would still be a losing battle that could lead to the withdrawal of support for public art, the production of bad art that panders merely to public needs, or both. One seasoned public art administrator asked me: “Is architecture evaluated this way? The same way public buildings need to exist, public art needs to exist. It’s people looking to weaken public art who are trying to ask these questions about its impact.”

The challenge of evaluating long-term, permanent installations

Glenn Weiss, former director of the Times Square Alliance Public Art Program and current director of Arts League Houston, posits that economic impact studies are “most possible with highly publicized, short-term projects like the Gates or large public art festivals.” Indeed, the New York City Mayor’s office published a detailed report on “an estimated $254 million in economic activity” that resulted from The Gates, a large installation in Central Park by internationally acclaimed artists Christo and Jeanne-Claude, based on data like increased park attendance and business at nearby hotels, restaurants, etc. However, most public art projects, even temporary ones, are not as monumental or heavily promoted as The Gates, making it difficult to prove that people come to a neighborhood, or frequent its businesses, primarily to see the public art.

Visitors crowd Christo and Jeanne-Claude’s “The Gates” (2005) in Central Park. Photo by Eric Carvin.

Weiss also believes that temporary festivals are generally easier to evaluate quantitatively than long-term public art projects. For example, during a finite event or installation, staff members can keep a count of attendees (some of the temporary public art projects I have encountered in my research, such as the FIGMENT annual participatory art festival on Governors Island and in various other U.S. cities, use attendance counts as a measure).

The few comprehensive studies connecting long-term, permanent public art to economic and community-wide impacts, conducted by research consultants and funded by specific grants, have led to somewhat inconclusive results. For example, An Assessment of Community Impact of the Philadelphia Department of Recreation Mural Arts Program (2002), led by Mark J. Stern and Susan C. Seifert of the University of Pennsylvania’s Social Impact of the Arts Project (SIAP), cites the assumed community-wide benefits of murals outlined in the Mural Arts Program’s (MAP’s) mission statement at the time of the study:

The creation of a mural can have social benefits for entire communities…Murals bring neighbors together in new ways and often galvanize them to undertake other community improvements, such as neighborhood clean-ups, community gardening, or organizing a town watch. Murals become focal points and symbols of community pride and inspiring reminders of the cooperation and dedication that made their creation possible.

Yet when asked to “use the best data available to document the impact that murals have had over the past decade on Philadelphia’s communities,” Stern and Seifert found that

this is a much more difficult task than one might imagine. First, there are significant conceptual problems involved in thinking through exactly how murals might have an impact on neighborhoods. Second, the quality of data available to test hypotheses concerning murals is limited. Finally, there are a number of methodological problems involved in using the right comparisons in assessing the potential impact of murals. For example, how far from a mural might we expect to see an impact? How long after a mural is painted might it take to see an effect and how long might that effect last?…Ultimately, this report concludes that these issues remain a significant impediment to understanding the role of murals.

By comparing data on murals to existing neighborhood quality of life data, Stern and Seifert considered murals’ connection to factors like community economic investment and indicators of more general neighborhood change (such as reduced litter or crime, or residents’ investment in other community organizing activities). The study also measured levels of community investment and involvement in murals. However, the scarce data available on these factors, according to the authors, are difficult to connect directly to public art in a cause and effect relationship. Stern and Seifert’s strongest finding was that murals may build “social capital,” or “networks of relationships” that can promote “individual and group well-being,” because of all the events surrounding mural production in which people can participate. It was more difficult to show a consistent relationship between murals and other theorized outcomes, such as ability to “inspire” passersby or serve as “amenities” for neighborhoods. The study recommends that “more systematic information on their physical characteristics and sites—‘before and after’—would provide a basis for identifying murals that become an amenity.”

A more recent report (2009) on Philadelphia’s commercial corridors by Econsult found “some indication of a positive correlation” between the presence of murals and shopping corridor success. Murals are described here as “effective and cost efficient ways of replacing eyesores with symbols of care.” However, the report also adds the disclaimer that a positive correlation is not necessarily proof of the murals’ role as the primary cause of a neighborhood’s appeal.

So what can we assess most easily, and how?

My research revealed that quantitative data on short-term inputs and outputs of public art programs is frequently cited (sometimes inappropriately) as evidence of a program’s success in reports or funding proposals: for example, the number of new projects completed in one year, the number of youth or community partners served, or the number of mural tour participants. This article does not focus on this type of reporting, as it does not address how public art impacts communities over time.

The good news is that there are several examples of indicators that are more easily measurable in certain types of public art situations, including permanent installations. These include:

- Testimonies on the educational and social impact of collaborative public art projects, from youth and community participants and artists alike

- Qualitative audience responses to public art, including whether or not the art provokes any type of discussion, debate, or controversy

- How a public artwork is treated over time by a community, including whether it gets vandalized, and whether the community takes the initiative to repair or maintain it

- Press coverage

- The “use” of a public artwork by its hosts, e.g. in educational programs or marketing campaigns

- Levels of audience engagement with public art via internet sites and other types of educational programming

Below I will summarize some helpful methods by which data is collected around all these indicators.

Mining the Press

Archiving press coverage of public art projects online is a common practice among organizations, as is presenting pithy press clippings and quotes in funding proposals and marketing materials as a means of demonstrating a project’s success. For researchers, studying articles (and increasingly, blog posts) on past projects can also provide rich documentation of artworks’ immediate effects, as well as points of comparison. For example, the “comments” sections of online articles and blogs can generate interesting, often unsolicited feedback, albeit from a nonrandom sample.

One possible outcome of public art projects is controversy, which is not always considered a bad thing, despite now-infamous examples of projects like Richard Serra’s Tilted Arc being removed. For example, Sofia Maldonado’s 42nd Street Mural, presented in March 2010 by the Times Square Alliance, provoked extensive coverage on news programs and blogs. The mural’s un-idealized images of Latin American and Caribbean women based on the artist’s own heritage led some women’s and cultural advocacy organizations to call for its removal. The Alliance opted to leave the mural up, and has cited this project as evidence of the Alliance’s commitment to artists’ freedom of expression. The debates led Maldonado to reflect, “as an art piece it has accomplished its purpose: to establish a dialogue among its spectators.”

Sofia Maldonado, “42nd Street Mural,” 2010, Commissioned by the Times Square Alliance Public Art Program.

Site visits and “public art watch”

As an attempt to promote more sustained observation of completed works over time, public art historian Harriet Senie assigns her students in college and graduate level courses a final term paper project every semester that contains a

“public art watch”…For the duration of a semester, on different days of the week, at different times, students observe, eavesdrop, and engage the audience for a specific work of public art. Based on a questionnaire developed in class and modified for individual circumstances, they inquire about personal reactions to this work and to public art in general (quoted in Sculpture Magazine).

Senie’s students also observe things like people’s interactions with an artwork, such as how often they stop and look up at it, take pictures in front of it, or use it as a meeting place.

Senie maintains that “Although far from ‘scientific,’ the information is based on direct observation over time—precisely what is in short supply for reviewers working on a deadline.” This approach towards challenging college students to think critically about public art has also been implemented in public art courses at NYU and Pratt Institute, and the aggregate results of student research over time are summarized in one of Senie’s longer publications.

I have not encountered any other organizations able to integrate this type of research into their regular operations; however, there may be opportunities to integrate direct observation into routine site visits to completed permanent public artworks.

In the NYC Percent for Art program, and its Public Art for Public Schools (PAPS) wing that commissions permanent art for new and renovated school buildings, staff members are expected to undertake periodic visits “to monitor the condition of artworks that have been commissioned,” according to PAPS director Tania Duvergne. Such “maintenance checks” can provide opportunities to survey building inhabitants or local residents about their opinions and use of the artworks.

Duvergne uses these “condition report” visits as opportunities to further her agency’s mission to “bridge connections between what teachers are already doing in their classrooms and their physical environments.” At each site, she tries to interview custodians, teachers, principals and students about whether the art is well treated, whether they know anything about the artwork (and are using the online resources available to them), and whether they want more information. Duvergne notes that many teachers use the public art in their teaching in some way, even if they do not know a lot about the artwork. While observing a public artwork during a site visit every few years is nowhere near as extensive or sustained as Senie’s class assignment, perhaps a similar survey and observation could be undertaken with a wide range of students and staff members over the course of a day.

Project participant and resident surveys

Organizations that create community-based public art usually have specific desired social, educational, or behavioral outcomes in project participants. Mural organizations Groundswell and Chicago Public Art Group describe thorough evaluation processes in which mural artists, youth, community partners and parents are all surveyed and sometimes interviewed before, during and after projects. Groundswell’s community partner post-project survey, for example, asks partners to rank their level of agreement about whether certain community-wide outcomes have been met, such as whether the mural increases the organization’s visibility, increases awareness of an identified issue, and improves community attitudes towards young people.

Groundswell’s theory of change (most recently honed in 2010 through focus groups with youth participants and community partners) articulates various clear desired outputs and outcomes for both youth and community partner organizations. This includes the development of “twenty-first century” life skills in teen mural participants. To measure this impact specifically, Groundswell has made it a priority to continue to track youth participants after they graduate, turn 21, and reach other checkpoints, according to Executive Director Amy Sananman. Groundswell recently hired an outside researcher to build a comprehensive database (using the free program Salesforce), in which participant data and survey results, and data on completed murals (such as whether any were graffitied, how many times they appeared in news articles, etc.) can be entered and compared to generate reports.

In 2006, Philadelphia’s Mural Arts Program conducted a community impact study using audience response questionnaires as a starting point. Then-special projects manager Lindsey Rosenberg employed college students, through partnerships with local universities, to conduct door-to-door surveys of all residents living within a one-mile radius of four murals. The murals differed by theme, neighborhood, and level of community involvement. The interns orally administered a multiple-choice questionnaire with questions ranging from general opinions of the murals to level of participation in making the murals to perceptions of changes in the neighborhood as a result of the murals. They then entered the surveys into a computer database specifically created for this study by outside consultants. The database not only calculated percentages of each response to murals, but tracked correlations between these responses and census demographic data, including income level and home ownership.
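
For organizations without a custom database, the same two steps (percentages of each response, then a breakdown by a demographic field) can be approximated with a few lines of code. This is a minimal sketch under my own assumptions, not a reconstruction of MAP's system: the field names `homeowner` and `opinion` and all of the records are hypothetical, and a grouped breakdown like this is only a first look at a possible relationship, not a formal correlation.

```python
from collections import Counter, defaultdict


def response_percentages(answers):
    """Percentage of respondents choosing each answer, to one decimal place."""
    counts = Counter(answers)
    total = len(answers)
    return {answer: round(100 * n / total, 1) for answer, n in counts.items()}


def percentages_by_group(records, group_key, answer_key):
    """Compute the same percentages separately for each demographic group."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record[group_key]].append(record[answer_key])
    return {
        group: response_percentages(answers) for group, answers in grouped.items()
    }


# Hypothetical survey records (invented for illustration only).
surveys = [
    {"homeowner": True, "opinion": "like"},
    {"homeowner": True, "opinion": "like"},
    {"homeowner": True, "opinion": "neutral"},
    {"homeowner": False, "opinion": "like"},
    {"homeowner": False, "opinion": "dislike"},
]

overall = response_percentages([s["opinion"] for s in surveys])
by_ownership = percentages_by_group(surveys, "homeowner", "opinion")
print(overall)
print(by_ownership)
```

A spreadsheet pivot table would do the same job; the point is that the tabulation itself is simple once responses are recorded in a consistent format.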

This research project was different from prior MAP community impact studies in that it assumed that “what people perceive to be the impact of a mural is in itself valuable,” as much as external evidence of change.

In 2007, MAP shared some preliminary results of this endeavor with me to aid my thesis research. At the time the research seemed to generate some useful data on which murals were appreciated most in which neighborhoods, and the correlation between appreciation and community participation in the projects. However, since then I have not been able to gather any further information on this study, or find any published results. I did hear from MAP at the time of the study that only 25% of people who were approached actually took the surveys, indicating just one problematic aspect of conducting such research on a regular basis. The database was also costly.

Most recently, MAP is partnering (page 160) with the Philadelphia Department of Behavioral Health & Mental Retardation Services (DBH/MRS), community psychologists from Yale, and almost a dozen local community agencies and funders with core support from the Robert Wood Johnson Foundation, on “a multi-level, mixed methods comparative outcome trial known as the Porch Light Initiative. The Porch Light Initiative examines the impact of mural making as public art on individual and community recovery, healing, and transformation and utilizes a community-based participatory research (CBPR) framework.” Unfortunately, MAP declined my requests for more information on this new study.

Interviewing youth and community members can of course only generate observations and opinions, but Groundswell at least is also taking the step of tracking what happens to participants after they complete a mural project. I am still not clear how to prove that any impacts on participants are a direct result of public art projects. Yet surveying project participants and community members about their feelings about a program or project, and how they think they were impacted by it, is one of the most do-able types of research (apart from the challenges of getting people to fill out surveys).

Community-based “proxies”

Groundswell director Amy Sananman has described some success in utilizing community partners as “proxies” for reporting on a mural’s local impact, effectively outsourcing some of the burden of data collection to other organizations. For example, the director of a nonprofit whose storefront has a Groundswell mural could report back to Groundswell on the extent to which local residents take care of the mural, how often people comment on it, etc.

PAPS, CPAG, and ArtBridge, an organization that commissions artwork for vinyl construction barrier banners, have described similar ideas for partnerships. ArtBridge hopes to implement a more formal process in which the owners of stores where its banners are installed can document changes like increased business due to public art. PAPS director Tania Duvergne also cites examples of “successful projects” in which public schools, on their own, designed art gallery displays or teaching curricula around their public art pieces, and shared this with PAPS on site visits.

There might be a danger in depending on community partner organization representatives to speak for the whole “community” or to provide reliable, accurate data. But if cooperative partners can be identified and regular reporting scheduled using consistent measurement tools, the burden of reporting on specific neighborhoods is lessened for the public art organization.

“Smart” Technology

Groundswell, ArtBridge, and MAP are all starting to use QR codes, which visitors to a public art site can scan with a smartphone application to reach websites with more information about the art. Groundswell experimented this past summer with adding QR codes to a series of posters designed by its Voices Her’d Visionaries program to be hung in public schools to educate teens about healthy relationships. Groundswell can then track how many hits the website gets through the QR app. In general, web activity on public art sites is an easy quantitative measure of public interest.
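
One simple way to count visits per QR code is to have each code point to the same web page with a different tag in the URL, then tally the tags from the site's list of requested URLs. The sketch below assumes this setup; the `src` parameter name, the poster tags, and the example.org addresses are all hypothetical, and I do not know how any of the organizations mentioned here actually track their hits.

```python
from collections import Counter
from urllib.parse import parse_qs, urlparse


def count_qr_visits(requested_urls, param="src"):
    """Tally visits by the tag each QR code appends to its URL."""
    counts = Counter()
    for url in requested_urls:
        query = parse_qs(urlparse(url).query)
        for tag in query.get(param, []):
            counts[tag] += 1
    return counts


# Hypothetical requested URLs; visits without a tag did not come from a QR code.
requests_seen = [
    "https://example.org/mural?src=poster-a",
    "https://example.org/mural?src=poster-a",
    "https://example.org/mural?src=poster-b",
    "https://example.org/mural",
]

visits = count_qr_visits(requests_seen)
for tag, n in visits.most_common():
    print(tag, n)
```

Tagging each poster or site separately makes it possible to compare locations, rather than only reporting total web traffic.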

Philadelphia’s Mural Arts Program has a “report damage” section on its website, where anyone who notices a mural in need of repair can alert MAP online. This is also a potential source for quantitative evidence of how many people notice and feel invested in murals.

Use of Interpretive Programming

Public art organizations are increasingly designing interpretive programming around completed artwork, from outdoor guided tours to curated “virtual” artwork displays. The Arts for Transit program of New York’s Metropolitan Transportation Authority provides downloadable podcasts about completed artworks on its website; other organizations include phone numbers to call for guided tours at public art sites themselves (as in many museum exhibits). Both in-person and virtual/phone tours can provide rich opportunities to track usage, collect informal feedback from participants, and solicit feedback via surveys. ArtBridge recently initiated its WALK program giving tours of its outdoor banner installations. After each tour, ArtBridge emails a link to a brief questionnaire to all tour participants, and offers a prize as an incentive for taking the survey.

Concluding remarks: What next for evaluation?

While systematic, reliable quantitative analysis of public art’s impact at the neighborhood level remains challenging and undervalued in the field, new technologies as well as effective partnerships are making it increasingly feasible for public art organizations to assess factors such as audience engagement, benefits to participants, and community stewardship of completed public art works. The Ixia “Guide to Evaluation” offers a useful roadmap for approaching the evaluation of any type of public art project. At the same time, we should not forget the ability of art to affect people in ways that may seem intangible or even immeasurable, or, as Glenn Weiss puts it, “become part of a memory of a community, part of how a community sees itself.”
